A powerful current is sweeping through K-12 education: generative AI. Yet beneath its promising surface, familiar tides of challenge and concern stir, reminiscent of every major technological wave before it. Tools like ChatGPT are transforming the way students learn, teachers teach, and schools operate. But for many experienced K-12 educators, there’s a distinct feeling of déjà vu. Haven’t we been here before?

Remember when computers first promised to be “the great equalizer” in classrooms? Or when the internet was going to democratize access to knowledge for everyone? Each new wave of technology brought immense promise, often coupled with unforeseen (yet predictable) challenges.

The Enduring Promise: “Technology Will Fix It!”

From the 1980s personal computer boom to the internet age, new tech was consistently hailed as the key to unlocking educational equity. The promise then was about access to information – a computer in every classroom, the world at students’ fingertips. Today, with AI, the promise has evolved: it’s about hyper-personalized learning, where AI tutors adapt lessons to each child’s unique needs, freeing up teachers for more impactful work.

Both eras share a core belief: technology can level the playing field. But history shows it’s rarely that simple.

Unintended Consequences: Familiar Problems?

The Persistent Digital Divide (Now with a “Human-AI” Twist)

Then: Unequal access to computers and the internet created the “digital divide.” Students without home computers or reliable internet were left behind.

Now: AI tools demand consistent, high-quality internet and up-to-date devices. If access remains uneven, AI will widen existing gaps. Even more concerning is the risk of a “human-AI divide,” where wealthier schools offer both human teachers AND AI, while under-resourced schools might rely heavily on AI, potentially reducing vital human-to-human interaction and holistic development. Simply put: basic access isn’t enough; it’s about quality of access and meaningful use.

Academic Integrity Under Siege (The AI “Arms Race”)

Then: Calculators, and later the internet, forced educators to rethink plagiarism and assessment.

Now: AI can generate sophisticated essays and code in moments, making “ghostwritten assignments” a major concern. This blurs the lines of authorship – if an AI writes it, who’s the author? The current “arms race” between AI generation and detection tools is draining resources and fostering distrust, often preventing us from focusing on deeper learning.

Bias at Algorithmic Scale

Then: Textbooks and software could subtly carry societal biases.

Now: AI learns from vast datasets. If those datasets reflect societal biases, AI will perpetuate and even amplify them. This means AI could unintentionally favor certain groups or stereotype marginalized students on a massive scale, affecting learning paths, recommendations, and even evaluations. Unlike individual human bias, algorithmic bias can spread instantly and invisibly, creating systemic inequity.

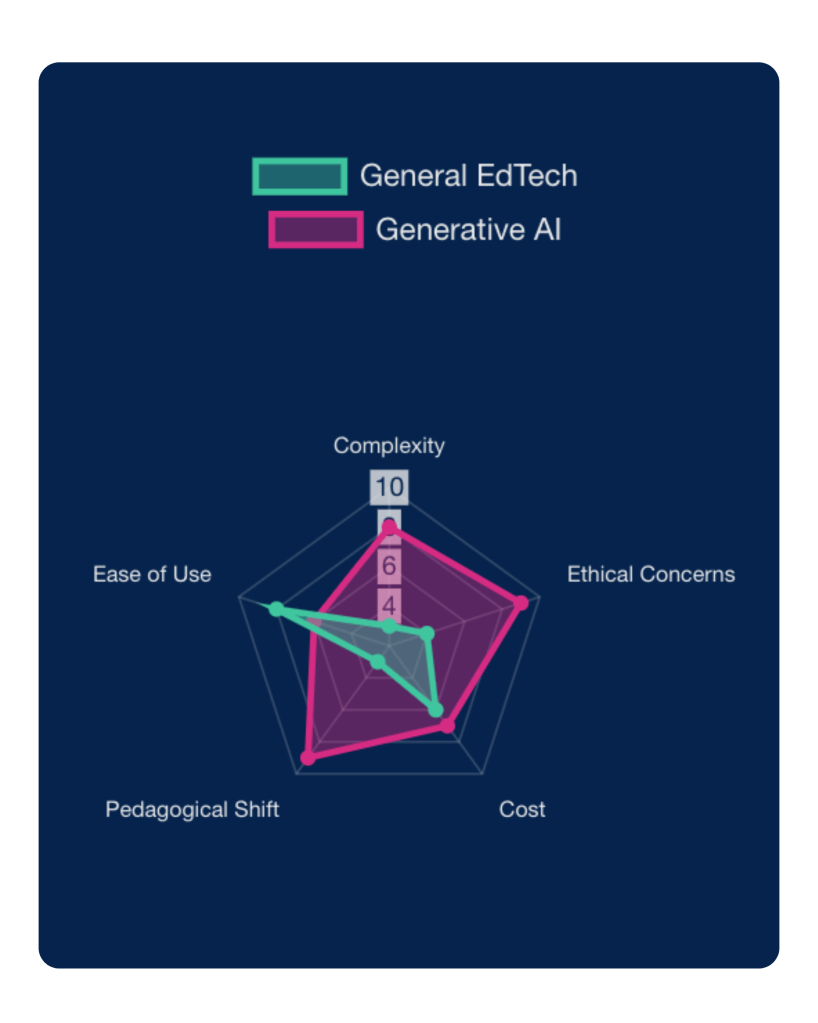

Compared to general EdTech, AI tools are perceived as more complex and as raising far greater ethical concerns around bias, privacy, and transparency. They also require a fundamental shift in pedagogy: not just new tools, but new ways of teaching, learning, and assessing knowledge.

Figure panels: ▲ Higher Complexity & Cost; ▲ Amplified Ethical Risks; ▲ Deeper Pedagogical Shift Required.

What’s Different This Time? AI’s Amplified Challenges

While the parallels are striking, AI isn’t just “more of the same.” It introduces new complexities:

- Higher Complexity: AI tools are often harder for educators to understand and integrate effectively, leading to increased anxiety.

- Amplified Ethical Concerns: Data privacy, algorithmic bias, and the “black box” nature of AI decision-making carry far higher stakes than they did with earlier EdTech.

- Deeper Pedagogical Shift: AI isn’t just a new tool; it demands a fundamental rethinking of how we teach, learn, and assess. It’s not about replacing teachers but transforming the very nature of instruction.

As I look ahead to the new school year and think about how to avoid repeating history while truly harnessing AI’s potential, I know we need a proactive, human-centered approach. This isn’t just about what “they” (administrators, policymakers) need to do, but also what “we” (teachers) can do in our classrooms every day.

Discussion Questions for Educators

Looking to start a conversation with your team? The following questions are designed to prompt reflection and discussion among educators about integrating AI into K-12 education.

- How do the historical “promises” of technology (like computers as the “great equalizer”) resonate with or differ from the current promises of AI in your school or district?

- Which of the “unintended consequences” (Digital Divide, Academic Integrity, Bias) do you feel is the most pressing concern in your current teaching context, and why?

- Considering the “amplified challenges” of AI (complexity, ethical risks, pedagogical shift), what is one specific area where you feel you need more support or training as an educator?

- Looking at the “Practical Steps” for teachers, which action do you feel is most immediately actionable in your classroom, and what might be a small first step you could take?

- How can teachers and leaders collaborate more effectively to address the challenges and leverage the opportunities presented by AI in our school community?

- What is one question or concern about AI in education that you still have after reading this, and how might we explore it further as a team?